In my previous blog post about Sentinel IP geolocation, I introduced a method to map IP addresses to IP address ranges from an external data source to provide an IP geolocation feature. That method is the one I use most of the time, but it is not without drawbacks. So, in this second part of the series, I am going to showcase some additional solutions to the same problem.

In the previous post I tackled the biggest challenge: finding an effective and scalable way to map an IP address to an IP range. This was necessary because IP geolocation databases store geolocation information for IP ranges or subnets, not for individual IPs. I provided a method anyone can use to solve this challenge. But again, even that solution had some drawbacks.

I stored the data in an external file and processed it with the externaldata operator. This means the data was stored outside of Sentinel. I usually like to keep data as close as possible to where it is processed (Sentinel in this case). Also, constantly reading the file from an external location can be expensive.

Even though I provided an effective way to match an IP to an IP range, this matching still makes our life a little harder, because the queries and rules become more complex (read my related post for details).

So, here are some other methods one can use. Please be aware that my number one recommended solution is the one in the other blog post. Because of this, I won’t provide ready-to-use code for the methods below.

X. Watchlist

One option is to store the geolocation data in a watchlist instead of storing it on an external site or storage and reading it with the externaldata operator. At least, that is the theory. In practice, this does not work due to some limitations of Sentinel watchlists.

Limitations:

- The total number of active watchlist items across all watchlists in a single workspace is currently limited to 10 million -> The geolocation database I used contains fewer entries than this (only about 3 million). So, this limit does not block it on its own, but it can become a problem if you also use other large watchlists.

- File uploads are currently limited to files of up to 3.8 MB in size -> My database is way bigger than this: somewhere between 200 and 300 MB after processing.

So, this is not a viable option for my database. But you can use it if you have a smaller file that fits into 3.8 MB, for example one that covers only your own country, or one that stores only country-level information.
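If you want to try the watchlist route with a trimmed-down file, the filtering can be done before upload. The sketch below is a minimal example, assuming a CSV with a header row; the column name `country_code`, the sample rows, and the country codes are hypothetical, and the 3.8 MB limit is treated conservatively as 3,800,000 bytes.

```python
import csv
import io

# 3.8 MB watchlist upload limit, treated conservatively as decimal megabytes (assumption)
WATCHLIST_UPLOAD_LIMIT_BYTES = 3_800_000


def filter_for_watchlist(csv_text: str, column: str, keep: set) -> tuple:
    """Keep only rows whose `column` value is in `keep` and report whether the result fits."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        if row[column] in keep:
            writer.writerow(row)
    filtered = out.getvalue()
    return filtered, len(filtered.encode("utf-8")) <= WATCHLIST_UPLOAD_LIMIT_BYTES


# Hypothetical sample data using documentation IP ranges and placeholder country codes
sample = "network,country_code\n192.0.2.0/24,AA\n198.51.100.0/24,BB\n"
filtered, fits = filter_for_watchlist(sample, "country_code", {"BB"})
print(filtered, fits)
```

The size check uses the UTF-8 encoded length because the upload limit applies to the file, not to the number of characters.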

2. Pushing logs into a table once a week

Numbering starts from 2, since this is the 2nd viable solution. The first one is in the previous blog post.

Instead of the previous solutions (from this post and the previous one), it is also reasonable to store this data in a table in the Log Analytics Workspace behind Sentinel.

You can create a Function App that downloads the IP geolocation database, processes it so that it can be used in our queries, and then forwards the logs to Sentinel. Databases (at least free ones) are rarely updated, so downloading and ‘updating’ the values once a week should be sufficient most of the time. By storing all the IP ranges and their geolocation info in a table, you would use approximately 200-300 MB of storage per week (if you push this data once a week).

So, the three steps are:

- Get the geo information. There are a ton of different geolocation databases out there. They can be accessed in different ways and formats.

- Process the data. Again, processing the data depends on your choice of geolocation information. My database arrives in a .zip file, so I have to extract it and then modify it according to my previous post.

- Forward the data to Sentinel. This is pretty easy because Microsoft provides skeleton code for it.

This way, instead of querying and downloading an external file you can just query a table in Sentinel.
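The forwarding step can be sketched as follows. This is a minimal example, assuming the legacy HTTP Data Collector API that Microsoft’s skeleton code targeted at the time of writing; it only builds the signed request (URL, headers, body) and does not send it. The log type `IPGeo` and the record fields are hypothetical; records sent this way land in a custom table with a `_CL` suffix.

```python
import base64
import hashlib
import hmac
import json
from email.utils import formatdate
from typing import Optional


def build_signature(workspace_id: str, shared_key: str, date: str, content_length: int) -> str:
    """Return the SharedKey Authorization header value for the Data Collector API."""
    string_to_sign = f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    key = base64.b64decode(shared_key)  # the workspace key is base64-encoded
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_request(workspace_id: str, shared_key: str, log_type: str,
                  records: list, date: Optional[str] = None):
    """Build (url, headers, body) for one POST; records end up in <log_type>_CL."""
    body = json.dumps(records)
    date = date or formatdate(usegmt=True)  # RFC 1123 date, e.g. 'Mon, 01 Jan 2024 00:00:00 GMT'
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, date,
                                         len(body.encode("utf-8"))),
        "Log-Type": log_type,
        "x-ms-date": date,
    }
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    return url, headers, body
```

The returned values can be sent with any HTTP client, for example `requests.post(url, data=body, headers=headers)`. The API documentation lists a 30 MB limit per post, so a 200-300 MB database has to be sent in batches.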

Benefits of this solution:

- Everything is stored inside Sentinel (Log Analytics Workspace).

- It is fairly cheap. Pushing 200-300 MB of data to Sentinel costs around 1 USD, depending on the region. (Running a Function App adds some cost, but with well-written code it won’t be significant. You can also use other, possibly cheaper, tools to push data to Sentinel.)

- As an MSSP, you only need your client’s Workspace ID and key to push this data to their Sentinel. This is way easier than giving each customer access to an external file, preventing access by anybody else, and managing all of this.

Issues with this solution:

- Each event in a Sentinel table has a TimeGenerated value. Analytics rules use this value to limit the time window they cover. The problem is that a rule can look back at most 14 days, so you have to push the logs to Sentinel at least every 2 weeks.

- A related problem is that even if the data is forwarded more frequently, you still need to broaden the time windows defined in your rules. For example, say you have a rule that checks sign-in logs from the last hour and you want to map them to their geolocation. Because the geolocation data can be older than that, you actually have to configure the rule on the GUI to cover the last push to the IP geolocation table. If the geolocation logs are pushed to Sentinel every 14 days, your rule has to cover the last 14 days. This can be mitigated by pushing the data more frequently, for example daily. In this case, your rules only have to cover 1 day in order to work. Considering how cheap 200-300 MB of events is, pushing it into Sentinel once a day costs less than 10 USD a week. But you still have to keep this in mind during rule creation.

- You still have to use an IP to IP-range correlation in your queries and rules, so the KQL logic is going to be more complex.

- You cannot use it in an NRT rule to correlate it with another table. Correlating two tables is not allowed in an NRT rule in general, and in this case the ingestion time of the geolocation data would also be too old, since NRT rules only process recently ingested logs.

As the previous post showed, storing every individual IP in an external file and downloading it over and over again for the externaldata operator is not feasible. With this solution, however, one can decide to expand the provided IP ranges into individual IPs and store those entries in a Log Analytics Workspace table. This takes approximately 300 GB. Pushing this huge amount of data into Sentinel once or a few times a week could work at a big company, but for smaller companies it is a huge cost overhead. So, I do not recommend it; I only mention it here for completeness.
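To get a feel for why expanding ranges into individual IPs explodes in size, the standard `ipaddress` module can count and enumerate the addresses in each range. This is a minimal sketch; the sample ranges are documentation ranges, not entries from a real geolocation database.

```python
import ipaddress
from itertools import islice


def count_addresses(cidrs):
    """Total number of individual addresses covered by the given CIDR ranges."""
    return sum(ipaddress.ip_network(cidr).num_addresses for cidr in cidrs)


def expand(cidr):
    """Lazily yield every address in a range, so huge ranges are never materialized."""
    return (str(ip) for ip in ipaddress.ip_network(cidr))


# Hypothetical sample ranges (documentation networks)
ranges = ["192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24"]
print(count_addresses(ranges))                 # 768
print(list(islice(expand("192.0.2.0/24"), 3)))  # ['192.0.2.0', '192.0.2.1', '192.0.2.2']
print(count_addresses(["2001:db8::/32"]))      # 79228162514264337593543950336
```

The last line shows that a single IPv6 /32 already contains 2^96 addresses, so full expansion is realistically limited to IPv4 ranges.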

3. Pushing only the required data into a LAW table

This solution is similar to the previous one. We get the data and forward it to the Log Analytics Workspace. The difference is that this option does not forward all the information preemptively; it only forwards the data once it is required.

To achieve this, you can use the same code as in the previous method, but you have to add an additional step. You can’t just process the data and send it; you also need a step that queries the Log Analytics Workspace to find the IPs in the logs. If you want to cover the last 30 minutes (the interval I used during my tests), you have to execute your code every 30 minutes and check the IPs from the last half hour. The code is more complex because you have to define each table you want to parse the IPs from, and you also have to define the logic for parsing those IPs. Regex parsing can be really heavy both in the Log Analytics Workspace and in the Function App when we are talking about a huge amount of data. Parsing raw logs like this is also not possible in the Log Analytics Workspace.
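A minimal sketch of the parsing step in the Function App, assuming the log lines have already been fetched from the workspace (the query itself is not shown). It pulls IPv4 candidates out with a regular expression, validates them with `ipaddress`, and keeps only unique public addresses; IPv6 is left out for brevity.

```python
import ipaddress
import re

# Loose IPv4 candidate pattern; real validation happens in ipaddress below
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_public_ips(lines):
    """Return the set of unique, globally routable IPv4 addresses found in the lines."""
    found = set()
    for line in lines:
        for candidate in IPV4_CANDIDATE.findall(line):
            try:
                ip = ipaddress.IPv4Address(candidate)
            except ValueError:
                continue  # e.g. '999.1.1.1' matches the pattern but is not a valid address
            if ip.is_global:  # skip private, loopback, documentation ranges, etc.
                found.add(str(ip))
    return found


logs = [
    "Sign-in from 8.8.8.8 failed",
    "internal host 10.0.0.5 talked to 8.8.8.8",
    "garbage 999.1.1.1",
]
print(extract_public_ips(logs))  # {'8.8.8.8'}
```

Deduplicating before the lookup matters here, because the same IP usually appears many times within a 30-minute window.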

The benefit is that in this case the heavy lifting can be done in the code. In the end, we will have IP-geolocation pairs instead of IP range-geolocation pairs, and IP-to-IP matching is trivial. And if you decide to store IPs instead of ranges in the table, you won’t even face the challenge I tackled in the previous post (IP to IP-range correlation).
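Doing the IP-to-range matching in code can be as simple as a binary search. One common approach, sketched below under the assumption that the ranges in the database do not overlap, is to sort the ranges by their start address and search them with `bisect`. IPv4 and IPv6 ranges are kept apart by using the IP version as the first sort key. The sample ranges and country codes are hypothetical.

```python
import bisect
import ipaddress


class RangeLookup:
    """Map single IPs to the value of the (non-overlapping) CIDR range containing them."""

    def __init__(self, rows):
        # rows: iterable of (cidr, value); sorted by (IP version, range start)
        nets = ((ipaddress.ip_network(cidr), value) for cidr, value in rows)
        self._rows = sorted(
            (net.version, int(net.network_address), int(net.broadcast_address), value)
            for net, value in nets
        )
        self._starts = [(version, start) for version, start, _, _ in self._rows]

    def lookup(self, ip):
        addr = ipaddress.ip_address(ip)
        key = (addr.version, int(addr))
        i = bisect.bisect_right(self._starts, key) - 1  # last range starting at or before ip
        if i >= 0:
            version, start, end, value = self._rows[i]
            if version == addr.version and start <= int(addr) <= end:
                return value
        return None  # IP not covered by any range


geo = RangeLookup([("192.0.2.0/24", "AA"), ("198.51.100.0/24", "BB"), ("2001:db8::/32", "CC")])
print(geo.lookup("198.51.100.7"))  # BB
print(geo.lookup("203.0.113.1"))   # None
```

Each lookup is O(log n), so even a database with millions of ranges stays fast once it is loaded and sorted.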

Benefits:

- It is possible to store IP-geolocation mappings instead of IP range-geolocation pairs since we can do the parsing and processing in the Function App. Since we only store the IPs that are found in the logs, the cost is going to be smaller compared to a situation in which we store all the existing IPs.

- Because we can store IPs instead of ranges, our rules and queries can be simpler.

- The ingestion cost can be lower. The cost depends on the number of IPs found in the logs. Storing only the ranges needs 300 MB based on the file I used. If we store individual IPs, we will need between 0 MB (no IPs at all) and 300 GB (all existing IPs) of storage. But usually, this option is cheaper from an ingestion cost point of view, and you are going to be closer to 0 MB than to 300 GB.

Drawbacks:

- A way more complex Function App, which can cause some additional costs.

- Cannot be used in an NRT rule.

- I only execute this code every 30 minutes, so for some IPs the geolocation data can be up to 30 minutes late. Thus, if a rule uses geolocation information, its execution needs to be delayed by 30 minutes.

- Only IPs from the logs are going to be processed and stored in the geolocation table. If an investigation requires the SOC to look up an IP that is not in any of the other tables, they won’t be able to do it. Thus, this solution is not optimal for investigations.

- There can be specific situations in which this solution is a good choice, but most of the time I would not go with this option. Due to the added complexity and the unpredictable cost savings, I generally do not recommend this solution.

Cost implications

The steps needed by any of the methods can be carried out in Azure. So, I did a calculation to see how much it would cost to deploy these things in Azure.

You can use the Azure Pricing Calculator to check it yourself; here I’m only going to provide a high-level explanation.

For the calculations I use the East US region, and the currency is USD.

Blob Storage cost

For the first method in the previous post, you can use Blob storage to store your CSV file. This file is approximately 300 MB. With Blob storage, you have to pay for the following:

- Storage: Just for comparison, the default setting in the Pricing Calculator is 1000 GB. We only need 300 MB, which costs less than 1 cent per month.

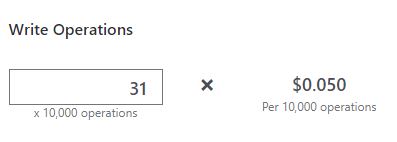

- Write: Every time we process a new version of the geolocation database, we have to carry out 1 write operation. One update per day means 31 write operations per month. The calculator prices write operations per 10,000 operations, and we need way fewer than that, so the cost will be less than 1 cent per month.

- Read: This one depends on how frequently we read the data. There can be tens of thousands of reads per day. With 10,000 reads per day, the cost at the end of the month is going to be 0.12 USD.
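Because Blob operations are billed per 10,000 operations, the read and write estimates are easy to recompute for your own read volume. The prices below are assumptions (hot tier, East US): $0.004 per 10,000 reads is the rate that reproduces the 0.12 USD figure above, and the write price is a placeholder; check the Pricing Calculator for current rates.

```python
def operations_cost(operations_per_month: int, price_per_10k: float) -> float:
    """Blob storage operations are billed per 10,000 operations."""
    return operations_per_month / 10_000 * price_per_10k


# Assumed East US hot-tier prices (USD per 10,000 operations); verify before relying on them
READ_PRICE_PER_10K = 0.004
WRITE_PRICE_PER_10K = 0.05

reads = operations_cost(10_000 * 31, READ_PRICE_PER_10K)  # 10k reads per day, 31-day month
writes = operations_cost(31, WRITE_PRICE_PER_10K)         # one upload per day
print(round(reads, 3), writes < 0.01)  # 0.124 True
```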

Function App

If you use the Consumption plan, you pay according to this formula:

Memory used x Execution time x Execution number

- Memory: During my test I could keep the memory usage below 60 MB. But even if you process the whole file in memory, you can still stay under 384 MB. The Function App also needs some additional memory by default, so I used 640 MB for the calculation.

- Execution time: I don’t have an exact number here. My code finished in less than 10 minutes on my machine, so I used a 1-hour execution time for the Function App as a generous upper bound. (Be aware that Function Apps time out much sooner than this by default: on the Consumption plan, the default timeout is 5 minutes and the maximum is 10 minutes.)

- Execution number: For the last solution, I pushed data to Sentinel every 30 minutes. This results in 1,488 executions in a 31-day month (48 per day).

This would result in a $47.17 bill at the end of the month. I don’t think this is too expensive, but it can be problematic for smaller companies. The coding is up to you here, so you can write more efficient or more expensive code too. That fully depends on your needs.

Ingestion cost

In East US you have to pay 4.3 USD/GB ingested. So, here are some scenarios:

- Pushing all the ranges into Sentinel means 300 MB. Doing it every day for a month costs about 40 USD.

- Pushing all the IPs instead of the ranges means 300 GB. This would be 1290 USD per push.

- Pushing only the IPs found in other tables is really up to your environment so I can’t provide an estimation there.
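The ingestion scenarios are simple multiplication. The sketch below uses the 4.3 USD/GB East US price from the text (check the current price for your region). The one-week daily-push case also matches the "less than 10 USD a week" estimate from the table-pushing method.

```python
PRICE_PER_GB = 4.3  # East US ingestion price quoted above (USD per GB)


def ingestion_cost(gb_per_push: float, pushes: int) -> float:
    """Total ingestion cost of pushing the same volume `pushes` times."""
    return gb_per_push * pushes * PRICE_PER_GB


print(round(ingestion_cost(0.3, 31), 2))  # 39.99  (ranges, daily for a month)
print(round(ingestion_cost(0.3, 7), 2))   # 9.03   (ranges, daily for a week)
print(round(ingestion_cost(300, 1), 2))   # 1290.0 (every IP, one push)
```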

This is just a high-level estimation. Your cost can vary based on your environment, automation, and usage. You can have a really expensive solution but also a completely free one.

Cheap-ass option

You don’t have to go with any of the above-mentioned solutions. If you want to, you can have a completely free (or close to free) solution.

- Instead of Blob storage, you can just upload the file to a free repository or any website you already own. It is important to ensure that downloading/reading the file is not rate-limited by the chosen storage.

- Instead of a Function App, you can just execute your PowerShell code on a machine on-prem. If you update this file once a week, you could even do it manually on your laptop during your coffee break (but please, do not do it manually).

- By using the first method (from the previous blog post), you don’t have to ingest any data into Sentinel, so there is no ingestion cost at all.

Practically free!

There is also an option in the externaldata operator to use zip files. This means that instead of the raw 200-300 MB CSV file, you can upload a roughly 30 MB zip file containing the CSV, and Sentinel can still handle it. If your site/repo caps downloads, this lets you serve roughly 7-10 times as many downloads as with the plain CSV file. To do this, just zip the CSV file, upload it, and use the externaldata operator with this setting: `with (format="csv", zipPattern="*.csv")`
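The zipping step needs nothing special. A minimal sketch using Python’s standard `zipfile` module: deflate compression and a single CSV entry, so that `zipPattern="*.csv"` matches it. The file and column names are placeholders.

```python
import io
import zipfile
from pathlib import Path


def zip_csv_bytes(csv_name: str, csv_bytes: bytes) -> bytes:
    """Return a deflate-compressed zip archive containing a single CSV entry."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(csv_name, csv_bytes)
    return buffer.getvalue()


def zip_csv_file(csv_path: str) -> Path:
    """Write <name>.zip next to the CSV (streamed from disk) and return its path."""
    source = Path(csv_path)
    target = source.with_suffix(".zip")
    with zipfile.ZipFile(target, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.write(source, arcname=source.name)  # keep only the file name inside the zip
    return target
```

Usage: `zip_csv_file("geo.csv")` produces `geo.csv` wrapped in `geo.zip`, ready to upload. How much smaller the archive gets depends on the data; highly repetitive CSV content compresses very well.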

Comparison

| | Using externaldata operator | Pushing every range into a table | Pushing only the IPs found in the logs into a table |
|---|---|---|---|
| Storage | External file | Table (in Sentinel) | Table (in Sentinel) |
| NRT rules | yes | no | no |
| Rule / Query complexity | medium | medium | simple |
| Investigation capabilities | Usable | Usable | Limited usage (some IPs can be missing from the table) |
| Function App complexity | not needed | low | high |
| Ingestion cost | zero | low | low - high |
| Additional costs | Processing of the geo db / File storage / File access and read | Processing of the geo db | Processing of the geo db |
| Longevity | Remains usable forever | Events are removed after the retention period and are usable in a rule for at most 2 weeks | Events are removed after the retention period and are usable in a rule for at most 2 weeks |
| Recommended | yes | yes | no |

+1 recommendation

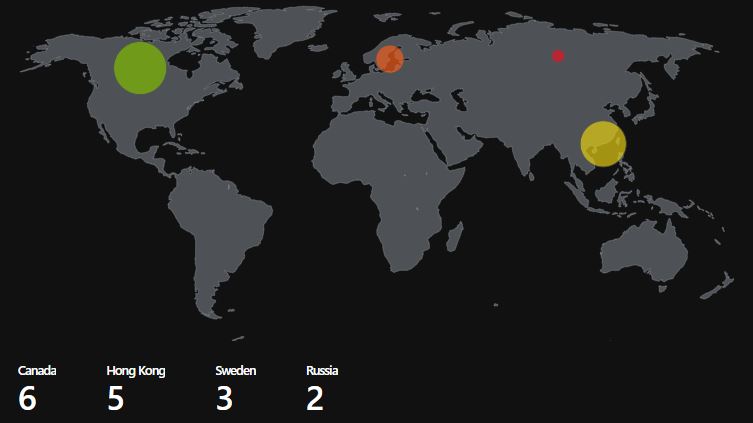

Do not forget that Sentinel also has a function to show the countries on a map. This can be really helpful to showcase malicious locations and to provide a quick overview for investigations.

Update 2022-05-27

I used this solution instead of the ipv4_lookup plugin because that plugin was really slow with huge IP database files. My database file contained around 3 million entries, while ipv4_lookup was designed to work well with smaller files of around 100k entries. Also, ipv4_lookup does not work with IPv6 addresses.

Unfortunately, Microsoft has since made some changes in Sentinel, and this query no longer works. Previously, it finished in less than 10 seconds with 100k entries in a table and 3 million entries in my geo database. Now, even with far fewer entries in the table, it is terminated after 5-10 seconds of execution due to high resource usage.