IP Geolocation is the association of the IP address of a device to its geographic location. This location can contain various types of information like the related country, city, area code, longitude, latitude, and so on. This information is very valuable but a lot of logs with IP in them do not have the geolocation stored in them. At this point, even Sentinel does not offer a built-in solution to enrich these logs during ingestion or even after ingestion in a scalable way. Because this functionality is a frequent request from clients, I decided to put some time into the investigation of this problem.

Read the update first at the end of the article. This solution is not recommended to be used anymore due to some changes in Sentinel.

Enriching events in Sentinel is not possible. Once a log is pushed to Sentinel, it can’t be modified anymore. The logs being unchangeable is a serious security requirement. With this, one can ensure that the logs are available the way they arrived in the SIEM, and they are going to be available the same way in the future before their removal. On the other hand, this also means there is no option for us to change a log after it reaches Sentinel.

Since there is no option to modify data in any way in Sentinel, here are the options we have:

- Some of the logs already contain this information. When this is the case, we are lucky, because we do not have to do anything. Even when they are not there by default, some security tools let us enable this feature. Unfortunately, most of the logs and tools are not like this.

- Use an intermediary system to enrich data. Regardless of whether we use a Syslog forwarder or a PowerShell script to receive and send data, there are plugins and solutions out there which can enrich the logs. Once the geolocation info is added to the events on the relay system, it can be forwarded to Sentinel.

- Even when none of the previous solutions are good options, it is still possible to collect geolocation information and store it next to the logs. Meaning, instead of modifying the original logs, we can just store the geodata in a different place and then correlate it with the logs with the IP in them.

If we are lucky enough and our logs fit into the first group, there is nothing to do.

If you can use the second option for your logs, then that is the way to go. Good for you. Even though sometimes this method is not feasible when it can be used, it can be a cheap and effective solution. Also, having the geolocation in the log itself makes the KQL queries more effective in Sentinel.

On the other hand, creating a new table or processing external files is always an option. But this third option comes with its challenges that I am going to explore and address. But be aware, there is no single best answer to this problem.

Using an external resource

There are two solutions based on external resource usage I am going to introduce in this post. Both can be used in specific situations while they are less attractive in some others.

In this case, by external resource I mean the information is stored outside of the event and not in a field in the log itself, may it be in another table or an external file in a repo.

Options:

- External file (externaldata): Store the data in an external file outside of Sentinel. You can use a repo for this, blob storage, or any other file storage solution. Usually, geolocation data is not a secret, so even storing it in a public repo is fine most of the time.

- Data table: Storing the IP address and geolocation mapping in a data table (log source) in Sentinel.

For the sake of brevity, I am only going to explore the first option in this blog post. The other one is going to be introduced and explained in the next blog post.

Geo Location information in externaldata

The ‘externaldata’ operator in Sentinel can be used to load data from a file from any available websites or external sources. In my solution, I use this operator to load the IP geolocation CSV into Sentinel and then process it with my query.

So, the first thing that we need is an IP geolocation database. There are a lot of them out there on the internet. Paid ones are potentially more precise, so possibly you want to buy a commercial one if you want to use it in your production environment. For testing or low-budget deployment, you can go with a free one too. During this deployment, I decided to use the IP2Location Lite database.

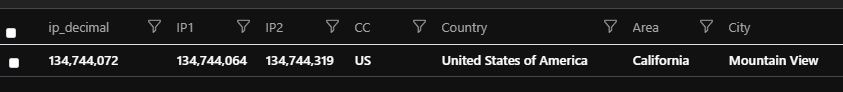

IP2Location stores the data in a CSV which is easy to process with the externaldata operator. This database has the IP address ranges stored in their decimal format, which makes it easy to decide whether an IP is in the range or not. Here are some examples:

“222625792”, “222658559”, “NL”, “Netherlands”, “Noord-Holland”, “Amsterdam”

“222658560”, “222691327”, “IE”, “Ireland”, “Dublin”, “Dublin”

“222691328”, “222707711”, “HK”, “Hong Kong”, “Hong Kong”, “Hong Kong”

The first decimal in the first line is 222625792, which is the IP 13.69.0.0. The second decimal 222658559 is 13.69.127.255. This means every IP between 13.69.0.0 and 13.69.127.255 is related to Amsterdam. If you transform an IP to its decimal format and it is between the two decimal numbers in the line, then it is tied to the given location.

In my solution I use this format because it is really simple to use. If your database is formatted differently then you should either adapt your queries or programmatically modify the database.

IP2Location also provides an API key that can be used to download their database. With some minimal coding, you we create an automated process that downloads the IP2Location database, extracts it, (modifies it - see later,) and uploads it to a repository where Sentinel can reach it.

So, we have all the prerequisites. We have an IP geolocation database, and we obviously have some logs in Sentinel with IP in them (or we will have at some point). Therefore, the only thing left is to correlate the database with our logs based on the IP fields in them. And now, the challenges to be tackled.

Challenges

User-defined function

In Sentinel, we can create our own parameterized function. A function like this can be used to create reusable code and to query information ‘dynamically’. It is fairly simple to put together a function that can return the geolocation data for a provided IP address from a CSV.

|

|

First, I load the IP geolocation data from the external repo to my Sentinel into the ‘ipranges’ variable.

Then, define the function that will be called later. With the ‘extend’ operator I transform the IP to its decimal format and then with the ‘where’ operator find the line that this IP is in range of.

In the last line, simply call the function to provide the geolocation information of the requested IP. An example output (for a different IP):

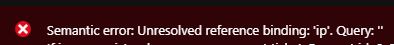

Unfortunately, there is a limitation in user-defined functions. User-defined functions that return a tabular expression can’t be invoked with an argument that varies with the row context (same for passing similar info into a toscalar() invocation). And the IP I want to get the geolocation info for is in the row, in the event I’m processing during an execution. Due to this limitation, I cannot use a user-defined parameterized function like this.

So, if I use this code at the end to dynamically gather the geoloc info for each IPs:

|

|

Then, I either get a tabular expression error or if the return value is a scalar (after modification) then I get the following one:

Thus, this solution can be good for manual data lookup or investigation, but it cannot be used by Analytic rules.

Join based on a common field

When we want to correlate two logs then the trivial way to go is the ‘join’ operator. Join helps us find matching entries based on a common field and value. So, the only thing we need is a field and value found in both log types.

So, we have a data table with IPs in it and we want to enrich these IPs with geolocation data. We also have the IP geolocation database (in CSV) that we want to use to enrich the first table. Unfortunately, one can see that we do not have matching fields in these two tables. The first one contains IPs while the database contains IP ranges (upper and lower bound of the range).

Join can only be used if there is an exact match. It is not possible to correlate an IP with an IP range. I want to find out in which range of the second table the IP from the first table is. Again, this is not possible with ‘join’.

In the geolocation database, we have all IPs covered. But instead of a fix IP we get the lowest and highest IP of the range for each entry. In theory, we could just go through all the ranges, calculate all the IPs for each range and store them that way. While this is possible in theory, in practice there are way too many IP addresses to store all of them.

My collection contains approximately 3 million ranges, and it takes up 200 MB. This means, storing all the IPs in a similar format would take approximately 300 GB (less with some optimization). Even though this is not an impossible size, downloading/accessing a file like this during each rule execution is not viable in practice.

So, right now there is no matching field and value in the two tables, and we also can’t just generate all the IPs to use that value as common information in the two sources.

Cartesian product (dummy value)

But if we cannot use all the IPs due to their huge number, we can just generate a dummy field with a dummy value in it and use it as a common field.

Cartesian product of table A and table B is the set of all ordered ‘a’, ‘b’ pairs, where ‘a’ is an entry from table A and ‘b’ is an entry from table B. So, we are matching all entries from table A to all entries from table B.

Sentinel does not have an option to create a Cartesian product (no full join), so to achieve this, we have to use a dummy field. We can create a field called ‘dummyfield’ with the value ‘dummyvalue’ in it for each entry for both tables. In the end, we just join them together based on the ‘dummyfield’. Because each entry contains the same value in this field, everything from table A will be matched with everything from table B.

Table A:

| Field A | Field Dummy |

|---|---|

| Peter | dummyvalue |

| Julia | dummyvalue |

Table B:

| Field B | Field C | Field Dummy |

|---|---|---|

| Test | Win | dummyvalue |

| Dev | Linux | dummyvalue |

Cartesian product of A and B using Field Dummy:

| Field A | Field B | Field C | Field Dummy |

|---|---|---|---|

| Peter | Test | Win | dummyvalue |

| Peter | Dev | Linux | dummyvalue |

| Julia | Test | Win | dummyvalue |

| Julia | Dev | Linux | dummyvalue |

Everything is paired with everything.

So, the Kusto query:

|

|

First, the query downloads the file from the repo and parses it. Then the ‘let’ statement creates a new ‘table’ to add the dummy field to it. Then the KQL adds the same dummy field to the LogsWithIPs table. Now, we have a common field with matching values, so we can map the entries from the two tables to each other.

With the last line, the code checks which entry from the database contains a lower limit smaller than our IP and a higher limit higher than our IP (or equal). This is the range that contains the IP, so this is the location of the IP.

Even though the solution works, it is too heavy for practical use. In the geolocation database CSV file, I have 3 million entries. The size of the other table varies from scenario to scenario, but in my case, it had approximately 15k IPs in 1 hour (I execute my relevant rules once every hour).

It is possible to reduce these numbers by excluding internal IP ranges and some other IPs you are not interested in.

With these numbers, the Cartesian product creates 3 million x 15k new events. Kind of a lot. Sentinel times out after 10 minutes for a normal query and based on my experience above 200 events in the non-geolocation table and 3 million entries in the geodatabase the query is not going to produce results.

With almost 3 million entries this is how the execution time changed as I increased the logs in the table:

| Events in the table | Query execution time |

|---|---|

| 20 | 52 seconds |

| 50 | 2:31 |

| 100 | 4:50 |

| 150 | 9:42 |

| 200 | timeout |

So, you can use this option for smaller queries with few IPs in them, but the solution is not scalable.

Join based on bags

We have a working solution at this point, but it needs optimization. In the previous version of the code, we used really dummy dummy values. So, in that version, each entry contained the same ‘dummyvalue’ value. Therefore, every event from table A was paired with every event from table B. Lots of new events.

So, as a next step, I decided to optimize it by creating bags instead of using one specific common value. Each entry in a bag will have the same dummy value, but entries from different bags will have different values. Instead of one common value, I need a function that can generate common values for the groups of IPs and ranges (bags).

We know that all IPs can be represented by a decimal number between 0 (0.0.0.0) and 4294967295 (255.55.255.255). As a first attempt I went with a query like this:

| extend dummy = round(IP1 / 10000000)) IP1 is the decimal representation of the lower IP of the range from the geolocation database. The same value is calculated for the IP from the other table.

| extend dummy = round(IP / 10000000)) If an IP is in the range of IP1 and IP2 (between IP1 and IP2) then we can be sure that the IP is always bigger or equal to IP1. Thus, rounding it down, IP is never going to be in a smaller bag than IP1.

Now, we only have to correlate logs from the tables with their respective bags but not with all the other entries. This significantly increases the performance, because we are going to generate way fewer events.

Picking the divider (10 million) randomly is not going to work for each range though. We know that IP (from the table) is not going to be in a lower bag than IP1 (lower range from geo database), but it can be in a higher one, which means our join based on bag numbers won’t cover everything.

To show this I use this example:

- 167772160 – 10.0.0.0

- 184549375 – 10.255.255.255

It is easy to see that the IP 10.0.0.0 (167,772,160) and a random IP 10.0.0.2 (167,772,162) are in the same bag (bag 16). And also, we know that IP 10.0.0.2 is in the range of 10.0.0.0-10.255.255.255. On the other hand, while the IP 10.200.200.200 (180,930,760) is also in the range it is going to be in a different bag (bag 18) than IP1. So, the join won’t work.

To solve this issue, I have to ensure that all IPs from a given range are assigned to the same bag. However, finding a divider for this is not always possible. It can happen anytime, that the lowest IP (IP1) and the highest IP (IP2) of the range are in a different bag, therefore not every IP within the range will fall into the same bag.

First, I decided to find the biggest difference in my database between an IP1 and IP2 of a range. The biggest difference I found was the last entry, namely the decimal 3758096384 and 4294967295. These numbers are the IP 224.0.0.0 and 255.255.255.255 respectively. This whole range is reserved therefore no geolocation data is assigned to it. I decided to exclude all reserved IP ranges because these are never going to have geolocation data mapped to them.

After this, I went through to find the biggest difference for non-reserved entries. Finding the biggest difference in CSV:

$CSV = Import-Csv -Path ".\smallgeo.CSV" -Header 'IP1', 'IP2', 'CC', 'Country', 'Area', 'City'

$max = 0

$CSV | ForEach-Object

{

$recent = $_.IP2 - $_.IP1

if ($recent -gt $max)

{

Write-Host $recent

Write-Host $max

Write-Host $_

$max = $recent

}

} After excluding the reserved IPs, the biggest difference was between IP 28.44.87.0 and 30.114.255.255. The difference in decimal is 38,185,215. So, it was almost 40 million. To use a nice round number, I decided to use the 40 million as the divider. However, this still does not ensure that a value from the range and the lower IP (IP1) will go into the same bag. But picking the biggest difference ensures that all the values from a range will be either in 1 bag or in 2 separate bags at the top.

Example:

IP1=75497472; IP2=83886079; CC=US; Country=United States of America; Area=Washington; City=Redmond

You can see that the floor of the first number divided by 40 million is 1, so it is in bag 1, while the second one is in bag 2. Every number until 79,999,999 goes to bag 1, while everything from 80,000,000 goes to bag 2 (from within the range). I am using the letter ‘Z’ to mark the number from where the IPs go to a different bag, 80 million in this case.

From this point on the whole thing is easy. I just went through my CSV file and modified it a little. I went through the whole database, and every time I found a range where the lower IP (IP1) and upper IP (IP2) were in a different bag I modified the file. I threw out the original entry and I created 2 new entries in its place.

New ranges:

- First range: From IP1 until the number at which the bags are changing (Z) -1

- Second range: From Z to IP2.

The location information is the same for the two. This way an IP that is in the range can be matched with the correct bag no matter what.

I used Z-1 as the highest IP in the first bag, and Z as the lowest IP in the second one. With this, I prevented an overlap between the ranges. But in reality, overlaps are not a problem here, because whichever range our IP is going to fall into it will still be tied to the same location at the end.

Let’s explain it through a simple example (oversimplified but hopefully clear):

We have the following ranges, and I also assigned a letter to them. I used letters to make it simple, but letters could be replaced by location information in a real-life scenario.

| Location | Range (IP1-IP2) | Numbers (IPs) in the range |

|---|---|---|

| A | 1-3 | 1,2,3 |

| B | 4-7 | 4,5,6,7 |

| C | 8-9 | 8,9 |

| D | 10-16 | 10, 11, 12, 13, 14, 15, 16 |

| E | 17-20 | 17, 18, 19, 20 |

As I did before, I find the highest difference between the lower end and the upper one of the ranges. Here, the biggest difference is between 10 and 16 and the difference is 6. Thus, we are using a bag of 6 and we map the bag number to the ranges with this function: floor(number/6)

- 1-3: this range is going to be bag 0.

- 4-7: this range does not fit into one bag, because 4 is still in bag 0 while 7 is going to be in bag one. This is because the floor of 7/6 is 1. So, I separate this range into two. One of them is a range from 4 to 5 and the other is from 6 to 7.

- 8-9: bag 1

- 10-16: 10-11 is still bag 1, 12-16 is bag 2, so again I separate them accordingly.

- 17-20: 17 is a bag 2 in itself (lower and upper range is the same), 18-20 is bag 3.

So, at the end we will have the following ranges and letter (location) mappings. These are the new entries after processing the database:

| Location | Range (IP1-IP2) | Bag |

|---|---|---|

| A | 1-3 | bag 0 |

| B | 3-5 | bag 0 |

| B | 6-7 | bag 1 |

| C | 8-9 | bag 1 |

| C | 10-11 | bag 1 |

| D | 12-16 | bag 2 |

| E | 17-17 | bag 2 |

| E | 18-20 | bag 3 |

Feel free to go through all the numbers and see that they are going to be assigned to the correct letters.

So, the other question is, how frequently do you have to modify an entry. For me, by using 40 million as bag size (divider) I found approximately 40 cases. 40 out of the 3 million entries in the CSV file. Not too bad. This means instead of the original approx. 3 million entries after the change we will have approximately 3 million + 40.

If you have a bigger max difference, you can either increase the bag size to something greater than 40 million, or you can just slice a range into more than 2 new ranges.

At this point, you can either modify the CSV file or your KQL query. In my opinion, modifying the CSV file with PowerShell (or anything else) is a much better way. Geo Location databases are rarely updated, typically once a week or so (commercial ones maybe more frequently). So, using a script once a week is better than executing a more complex KQL query constantly, hundreds of times a day. Also, you only must write the PowerShell script once, while you are constantly going to work on new queries and rules.

Powershell script:

$CSV = Import-Csv -Path ".\test.csv" -Header 'IP1', 'IP2', 'CC', 'Country', 'Area', 'City'

$temp_content = [ordered]@{}

$CSV | ForEach-Object{

$bag1 = [math]::Floor($_.IP1 / 40000000)

$bag2 = [math]::Floor($_.IP2 / 40000000)

if ($bag1 -ne $bag2)

{

$Z = [math]::Floor($_.IP2 / 40000000) * 40000000

$temp_content += [pscustomobject] @{IP1=$_.IP1; IP2=$Z-1 ; CC=$_.CC; Country=$_.Country; Area=$_.Area; City = $_.City}

$temp_content += [pscustomobject] @{IP1=$Z; IP2=$_.IP2 ; CC=$_.CC; Country=$_.Country; Area=$_.Area; City = $_.City}

}

$temp_content += [pscustomobject] @{IP1=$_.IP1; IP2=$_.IP2 ; CC=$_.CC; Country=$_.Country; Area=$_.Area; City = $_.City}

}

$temp_content | Export-CSV -Path .\small_output.csvSince I decided to modify the PowerShell code the KQL query can remain pretty simple:

let bagsize = 40000000; //define a bagsize, or use the one you calculated with the powershell script

let ipranges = externaldata(IP1:long, IP2:long, CC:string, Country:string, Area:string, City:string) [ @"<LINK_TO_THE_GEOLOCATION_CSV>"] with (format="csv");

let ipranges2 =

ipranges

| extend dummy = round(IP1 / bagsize);

LogsWithIPs

//this table has IPs in the ip field

| where TimeGenerated > ago(14d)

//the iif is here, so you can use both normal and decimal-formatted IPs

| extend ipv2 = iif(ip contains '.',tolong(split(ip,'.')[0])*16777216 + tolong(split(ip,'.')[1])*65536 + tolong(split(ip,'.')[2])*256 + tolong(split(ip,'.')[3]), tolong(ip))

| extend dummy = round(ipv2 / bagsize)

| join kind=leftouter ipranges2 on dummy

| where (ipv2 >= IP1 and ipv2 <= IP2) or isempty(IP1)Download the code from GitLab. Please be aware, the PowerShell code on GitLab is a modified and optmizied version of the script above. Also, it contains some additional features.

So, here it is. We have a solution, that covers every IP scenario, we don’t miss anything with it. It also has a way better performance. But how much did the performance improve?

| Method | Events in table | Query execution time |

|---|---|---|

| Cartesian product | 150 entries | 9:42 |

| Cartesian product | 200 entries | timeout |

| Using bags (40 million as divider) | 100k entries | <10 seconds |

This solution is definitely something you can use in production. But it also has some drawbacks.

The uploaded CSV database is approximately 200 MB. A lot of file storage solution charges you based on file read or egress traffic or at least they limit reading and download. Every rule, that uses this file is going to download/read the CSV during each execution. This can quickly add up and generate some costs. If you can store the data somewhere where this is acceptable, then you won’t have a problem with this.

When you process the file in PowerShell you have to load it into memory, which is again 200 MB. My intial code - the one you can find in this post - even went up to 5 GB of memory during its execution. The optimized one on GitLab is much better. And some of the serverless coding functions charge you based on memory. So again, this can introduce some unexpected costs. On the other hand, this processing only must be done a few times per week tops.

Update 11/02/2022: There is also an option in the externaldata operator to use zip files. This means instead of the raw 200 MB CSV file you can just upload a 30 MB zip file with the CSV in it, and Sentinel can still handle it. If you have a limit on downloads, you can use this to allow 10x as much download than you have with the normal CSV files. To do this, just zip the CSV file, upload it, and change the relevant code to this (format=“csv”, zipPattern="*.csv").

To be continued

Even though the solution above is a working - and might I say a well-working - one, there are still other options with their own benefits. I’m going to explore another way to solve the geolocation enrichment problem in my next blog post.

Until then and until MS does not provide a built-in way for IP geolocation enrichment, feel free to use the solution above.

Update 2022-05-27

I used this solution instead of the ipv4_lookup plugin because that plugin was really slow in case of huge IP database files. My database file contained around 3 million entries, while ipv4_lookup was designed to work well with smaller files like 100k entries. Also, ipv4_lookup does not work with IPv6 addresses.

Unfortunately, since then Microsoft has made some changes in Sentinel and now this query is not working anymore. Previously, this code finished in less than 10 seconds with 100k entries in a table and 3 million entries in my geo database. But now with way less entries in the table, it is terminated after 5-10 seconds of execution due to high resource usage.